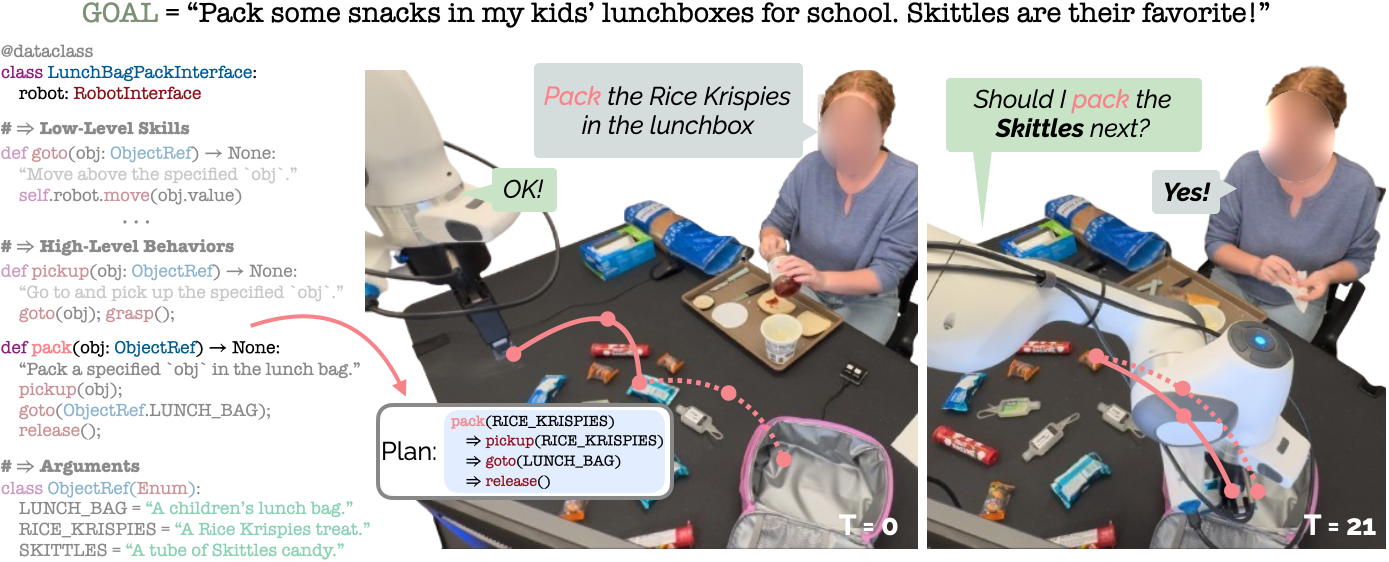

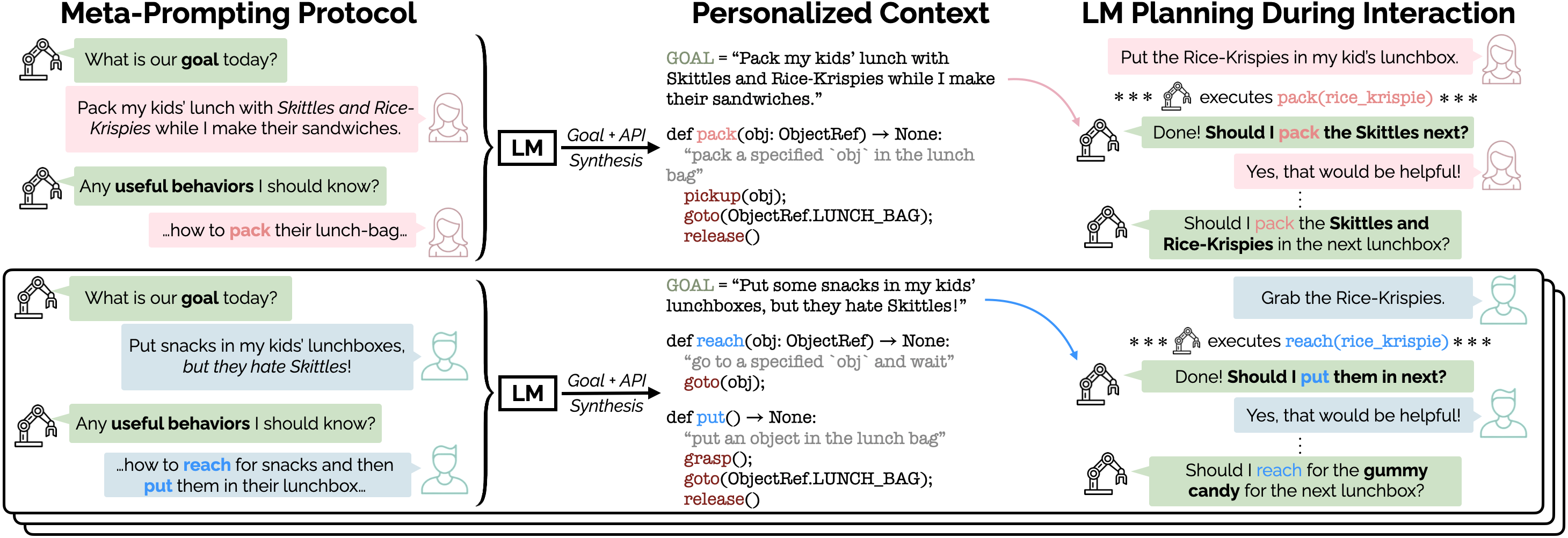

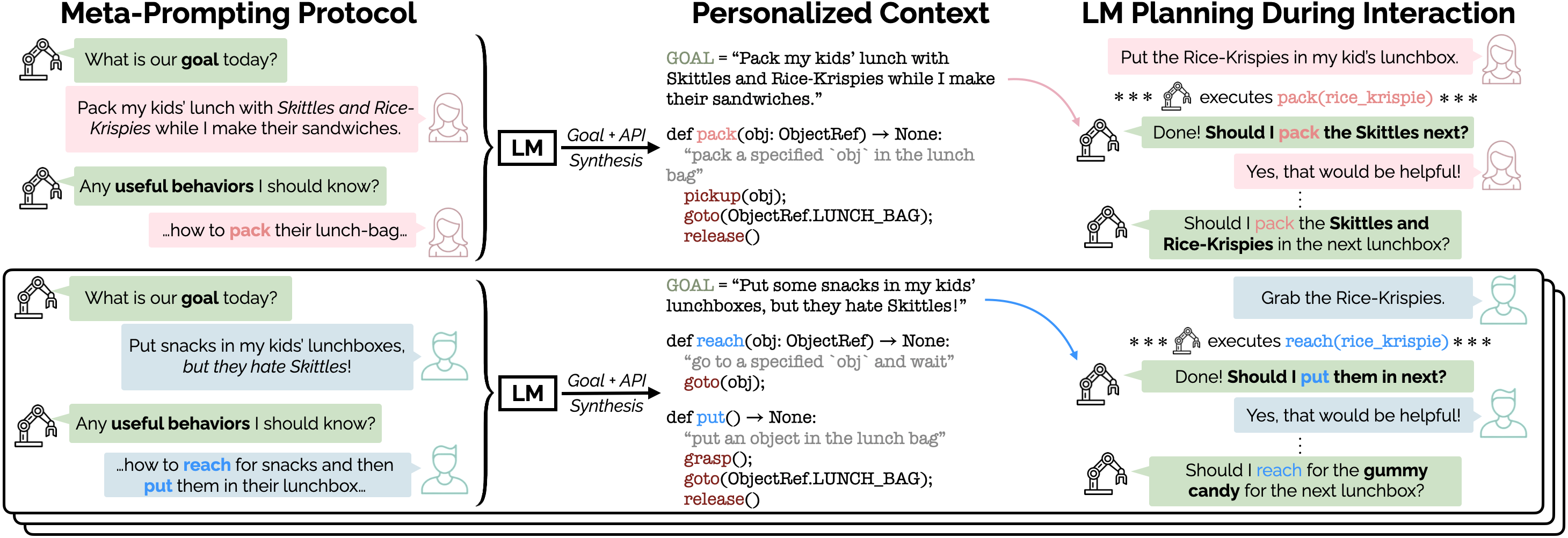

Contributions: Meta-Prompting & Proactive Planning

ProVox develops a novel meta-prompting protocol to collect two critical pieces of information from each user: their specific goal and an API of useful behaviors.

Crucially, each user has a distinct set of preferences, yielding different goals and behaviors. For example, the female user [Top-Left] wants her children's lunch to contain Skittles, Rice Krispies, and hand sanitizer; she teaches the robot to pack objects, fully confident in its ability to identify, grasp, and move them. In contrast, the male user [Bottom-Left] is more hesitant to trust the robot, so he splits pick-and-place into two parts: a `reach_for` motion that moves above an object without grasping it, followed by a `put` behavior that completes the motion. The language model task planner then leverages this meta-prompted context to proactively suggest helpful behaviors over the course of the interaction [Right].
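As a minimal sketch of how the meta-prompted context could be represented, the block below stores each user's goal alongside their personal behavior API and expands a "move this object into the bag" request into whichever behaviors that user taught. The `Behavior` and `UserContext` classes, the `plan_move` helper, the `pack` behavior name, and every argument list are hypothetical illustrations, not ProVox code; only `reach_for` and `put` come from the example above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Behavior:
    """A user-taught behavior exposed to the planner as an API function (hypothetical schema)."""
    name: str
    docstring: str


@dataclass
class UserContext:
    """Meta-prompted context: the user's goal plus their personal behavior API."""
    goal: str
    behaviors: List[Behavior] = field(default_factory=list)

    def has(self, name: str) -> bool:
        return any(b.name == name for b in self.behaviors)


def plan_move(ctx: UserContext, obj: str, target: str) -> List[str]:
    """Expand 'move obj into target' into calls from this user's API (illustrative only)."""
    if ctx.has("pack"):
        # Trusting user: one behavior identifies, grasps, and moves the object.
        return [f"pack(object={obj})"]
    if ctx.has("reach_for") and ctx.has("put"):
        # Hesitant user: hover above the object first, then complete the motion with `put`.
        return [f"reach_for(object={obj})", f"put(object={obj}, target={target})"]
    raise ValueError("No behavior in this user's API can move objects")


trusting_user = UserContext(
    goal="pack a lunch with Skittles, Rice Krispies, and hand sanitizer",
    behaviors=[Behavior("pack", "Pick up an object and place it in the lunch bag.")],
)
hesitant_user = UserContext(
    goal="pack a lunch bag",  # hypothetical goal text
    behaviors=[
        Behavior("reach_for", "Move above an object without grasping it."),
        Behavior("put", "Complete the motion started by reach_for."),
    ],
)
print(plan_move(trusting_user, "SKITTLES", "LUNCHBAG"))  # ['pack(object=SKITTLES)']
print(plan_move(hesitant_user, "SKITTLES", "LUNCHBAG"))
```

The same request yields one call for the first user and two for the second, which is the kind of per-user difference the planner has to respect.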

Language Model Task Planner Prompts

In the following code blocks, we provide the actual GPT-4 Turbo (v04-09) prompts that we use for our lunch bag packing setting:

Base Language Model API

```python
from typing import Dict, List


# Utility Function for "Python-izing" Arguments as Types
def pythonize_types(types: Dict[str, List[Dict[str, str]]]) -> str:
    """Render each type class and its elements as a Python Enum listing for the system prompt."""
    py_str = "# Python Enums defining the various known objects in the scene\n\n"

    # Create Enums for each Type Class
    py_str += "# Enums for Various Object Types\n"
    for type_cls, element_list in types.items():
        py_str += f"class {type_cls}(Enum):\n"
        for element in element_list:
            # One member per element; the docstring becomes an inline comment
            py_str += f"    {element['name']} = auto()  # {element['docstring']}\n"
        py_str += "\n"

    return py_str.strip()
```
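To see what the model actually receives, here is a small standalone check (the utility is restated so the block runs on its own, and `demo_types` is a made-up two-object subset). It prints the rendered API and parses it with `ast.parse`, on the assumption that the prompt should show the model syntactically valid Python.

```python
import ast
from typing import Dict, List


def pythonize_types(types: Dict[str, List[Dict[str, str]]]) -> str:
    # Same logic as the utility above, restated so this block runs standalone.
    py_str = "# Python Enums defining the various known objects in the scene\n\n"
    py_str += "# Enums for Various Object Types\n"
    for type_cls, element_list in types.items():
        py_str += f"class {type_cls}(Enum):\n"
        for element in element_list:
            py_str += f"    {element['name']} = auto()  # {element['docstring']}\n"
        py_str += "\n"
    return py_str.strip()


demo_types = {
    "object": [
        {"name": "FORK", "docstring": "A fork."},
        {"name": "LUNCHBAG", "docstring": "A bag or lunchbag or lunchbox for a child."},
    ]
}
api_block = pythonize_types(demo_types)
print(api_block)

# Sanity check: the rendered API parses as Python (Enum/auto are never resolved, only parsed).
ast.parse(api_block)
```

A docstring containing a newline would typically break this check, since the text after the newline would no longer sit inside the comment.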

```python
# Fully-Observable Environment State (Object-Oriented)
TYPE_DEFINITIONS = {
    "object": [
        {"name": "CARROTS", "docstring": "A bag of carrots."},
        {"name": "APPLE_SLICES", "docstring": "A bag of apple slices."},
        {"name": "GOLDFISH", "docstring": "A carton of goldfish snack."},
        {"name": "FORK", "docstring": "A fork."},
        {"name": "SPOON", "docstring": "A spoon."},
        {"name": "CHEERIOS", "docstring": "A bag of Cheerios cereal."},
        {"name": "MILK", "docstring": "A carton of milk."},
        {"name": "SKITTLES", "docstring": "A tube-shaped red container of Skittles candy."},
        {"name": "GUMMY_CANDY", "docstring": "A gummy candy shaped like a hamburger for children."},
        {"name": "RICE_KRISPIE", "docstring": "A rice krispie snack treat for children."},
        {"name": "HAND_SANITIZER", "docstring": "A small tube of Purell hand sanitizer for cleaning hands before a meal."},
        {"name": "LUNCHBAG", "docstring": "A bag or lunchbag or lunchbox for a child."},
    ]
}
```
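The system prompt tells the model to return a new object rather than fail when something is missing from the API. One possible robot-side counterpart, sketched here under that assumption, builds a runtime `Enum` from the same type definitions and registers unknown names so they appear the next time the API is rendered. `DEMO_TYPES`, `build_object_enum`, `resolve_object`, and the placeholder docstring are hypothetical.

```python
from enum import Enum
from typing import Dict, List, Optional

# Hypothetical two-object subset of the type definitions above.
DEMO_TYPES: Dict[str, List[Dict[str, str]]] = {
    "object": [
        {"name": "SKITTLES", "docstring": "A tube-shaped red container of Skittles candy."},
        {"name": "LUNCHBAG", "docstring": "A bag or lunchbag or lunchbox for a child."},
    ]
}


def build_object_enum(types: Dict[str, List[Dict[str, str]]]) -> type:
    """Create a runtime Enum whose members mirror the prompt's `object` class."""
    return Enum("ObjectType", [element["name"] for element in types["object"]])


def resolve_object(name: str, types: Dict[str, List[Dict[str, str]]]) -> Optional[Enum]:
    """Map a model-returned object name to a known member, registering unknown names.

    Unknown names are appended to `types` with a placeholder docstring and None is
    returned, so the caller can decide how to handle an object the robot has not seen.
    """
    object_enum = build_object_enum(types)
    try:
        return object_enum[name]
    except KeyError:
        types["object"].append({"name": name, "docstring": "Added during interaction."})
        return None


print(resolve_object("SKITTLES", DEMO_TYPES))  # ObjectType.SKITTLES
print(resolve_object("PRETZELS", DEMO_TYPES))  # None (now registered)
print(resolve_object("PRETZELS", DEMO_TYPES))  # ObjectType.PRETZELS
```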

```python
# Base System Prompt (note `USER_DEFINED_GOAL`)
BASE_SYSTEM_PROMPT = (
    "You are a reliable code interface that will be representing a robot arm in a collaborative interaction "
    "with a user.\n\n"
    f"In today's session, the user and robot arm will be working together to {USER_DEFINED_GOAL}. "
    "You will have access to a Python API defining some objects and high-level functions for "
    "controlling the robot.\n\n"
    "```python\n"
    f"{pythonize_types(TYPE_DEFINITIONS)}\n"
    "```\n\n"
    "Given a spoken utterance from the user your job is to identify the correct sequence of function calls and "
    "arguments from the API, returning the appropriate API call in JSON. Note that the speech-to-text engine is not "
    "perfect! Do your best to handle ambiguities, for example:\n"
    "\t- 'Put the carrots in the back' --> 'Put the carrots in the bag' (hard 'g')\n"
    "\t- 'Throw the popcorn in the in' --> 'Throw the popcorn in the bin' (soft 'b')\n\n"
    "If an object is not in the API, you should not fail. Instead, return a new object, which will be added to the API in the future. "
    "Even if you are not sure, respond as best you can to user inputs."
)
```
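If the system prompt has to be assembled only after the meta-prompting protocol returns the user's goal, one option is a deferred `str.format` template rather than a string built at definition time. The sketch below is a hypothetical, shortened template (only the opening sentences are reproduced); `SYSTEM_PROMPT_TEMPLATE` and `build_system_prompt` are illustrative names.

```python
from string import Formatter

# Hypothetical shortened template: the goal and API slots stay open until meta-prompting finishes.
SYSTEM_PROMPT_TEMPLATE = (
    "You are a reliable code interface that will be representing a robot arm in a collaborative interaction "
    "with a user.\n\n"
    "In today's session, the user and robot arm will be working together to {goal}. "
    "You will have access to a Python API defining some objects and high-level functions for "
    "controlling the robot.\n\n"
    "```python\n{api_block}\n```\n\n"
)


def build_system_prompt(goal: str, api_block: str) -> str:
    """Fill the template once the user's goal and rendered API are known."""
    # Guard against typos in placeholder names before formatting.
    fields = {name for _, name, _, _ in Formatter().parse(SYSTEM_PROMPT_TEMPLATE) if name}
    assert fields == {"goal", "api_block"}, fields
    return SYSTEM_PROMPT_TEMPLATE.format(goal=goal, api_block=api_block)


prompt = build_system_prompt(
    "pack a lunch bag",
    "class object(Enum):\n    FORK = auto()  # A fork.",
)
print(prompt)
```

Substituted values are inserted verbatim, so braces inside the rendered API would not be re-interpreted as placeholders.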

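```python
# In-Context Examples (note `USER_DEFINED_EXAMPLES`)
# make_example(utterance, function_name, arguments_json, call_id), defined as in Grannen et al. 2024
# Tool-call arguments are written as valid JSON (double-quoted keys and strings)
ICL_EXAMPLES = [
    {"role": "system", "content": BASE_SYSTEM_PROMPT},
    make_example("release", "release", "{}", "1"),
    make_example("grasp", "grasp", "{}", "2"),
    make_example("go home", "go_home", "{}", "3"),
    make_example("go to the bag", "goto", '{"object": "LUNCHBAG"}', "5"),
    make_example("go away!", "go_home", "{}", "6"),
    make_example("grab the gummy", "pickup", '{"object": "GUMMY_CANDY"}', "7"),
    # Assumes each user-defined example is an (utterance, function_name, arguments_json, call_id) tuple
    *[make_example(*ex) for ex in USER_DEFINED_EXAMPLES],
]
```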
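The definition of `make_example` is not shown here. As a sketch under the assumption that each demonstration is encoded as OpenAI Chat Completions tool-call messages, one plausible encoding is a user turn, an assistant turn carrying a `tool_calls` entry, and a `tool` turn answering that call. The name `make_example_messages`, the `call_` id prefix, and the `"success"` tool result are hypothetical. Because this variant returns three messages per example, results are flattened with `extend`.

```python
import json
from typing import Any, Dict, List


def make_example_messages(
    utterance: str, function_name: str, arguments: str, call_id: str
) -> List[Dict[str, Any]]:
    """Encode one few-shot demonstration as user turn, assistant tool call, and tool result."""
    json.loads(arguments)  # fail early if the example arguments are not valid JSON
    tool_call_id = f"call_{call_id}"
    return [
        {"role": "user", "content": utterance},
        {
            "role": "assistant",
            "content": None,
            "tool_calls": [
                {
                    "id": tool_call_id,
                    "type": "function",
                    "function": {"name": function_name, "arguments": arguments},
                }
            ],
        },
        # Chat Completions expects each assistant tool call to be answered by a `tool` message.
        {"role": "tool", "tool_call_id": tool_call_id, "content": "success"},
    ]


examples = [
    ("go home", "go_home", "{}", "3"),
    ("go to the bag", "goto", '{"object": "LUNCHBAG"}', "5"),
]
messages: List[Dict[str, Any]] = [{"role": "system", "content": "<system prompt>"}]
for ex in examples:
    messages.extend(make_example_messages(*ex))
print(len(messages))  # 7
```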

Note that both `USER_DEFINED_GOAL` and `USER_DEFINED_EXAMPLES` are produced by the meta-prompting protocol (via the Gradio interface shown in the overview video above). We encode each motion primitive as an OpenAI function-calling tool (`FUNCTIONS`), identically to Grannen et al. 2024. We then invoke generation (without proactive planning) as follows:

```python
from openai import OpenAI

# OpenAI Chat Completion Invocation - All Responses are added to "ICL_EXAMPLES" as running memory
openai_client = OpenAI(api_key=openai_api_key, organization=organization_id)
llm_task_plan = openai_client.chat.completions.create(
    model="gpt-4-turbo-2024-04-09",
    messages=[*ICL_EXAMPLES, {"role": "user", "content": USER_UTTERANCE}],
    temperature=0.2,
    tools=FUNCTIONS,
    tool_choice="auto",
)
```
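The exact tool definitions follow Grannen et al. 2024 and are not reproduced here. As a hedged sketch, the block below shows what two primitives could look like in the Chat Completions `tools` format, plus a small dispatcher that runs the primitive named by a returned tool call. `EXAMPLE_TOOLS`, the descriptions, `PRIMITIVES`, and `dispatch` are hypothetical; the `object` parameter is left as a free string (no `enum`) so the model can still name new objects, as the system prompt requests.

```python
import json
from typing import Any, Callable, Dict, List

# Hypothetical tool schemas in the Chat Completions `tools` format.
EXAMPLE_TOOLS: List[Dict[str, Any]] = [
    {
        "type": "function",
        "function": {
            "name": "goto",
            "description": "Move the robot arm to the given object.",
            "parameters": {
                "type": "object",
                "properties": {"object": {"type": "string", "description": "Object name, e.g. LUNCHBAG."}},
                "required": ["object"],
            },
        },
    },
    {
        "type": "function",
        "function": {
            "name": "go_home",
            "description": "Return the robot arm to its home pose.",
            "parameters": {"type": "object", "properties": {}},
        },
    },
]

# Stand-in primitives; a real system would command the robot instead of returning strings.
PRIMITIVES: Dict[str, Callable[..., str]] = {
    "goto": lambda object: f"moved to {object}",
    "go_home": lambda: "at home",
}


def dispatch(name: str, arguments: str, primitives: Dict[str, Callable[..., str]]) -> str:
    """Run the primitive named by a tool call; `arguments` is the JSON string the model returns."""
    if name not in primitives:
        raise KeyError(f"Model requested unknown primitive: {name}")
    return primitives[name](**json.loads(arguments))


# With a live response, each call in `llm_task_plan.choices[0].message.tool_calls or []`
# exposes `call.function.name` and `call.function.arguments` for dispatch.
print(dispatch("goto", '{"object": "LUNCHBAG"}', PRIMITIVES))  # moved to LUNCHBAG
```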

Proactive Planning Prompt

To enable proactive planning in ProVox, we augment the above system prompt with a turn-based "trigger prompt" that explicitly re-encodes the user-defined goal (`USER_DEFINED_GOAL`) to suggest helpful actions:

```python
# Query the Language Model Planner for Helpful Next Actions
ACTIVE_PROMPT = (
    f"Propose an action to perform next to {USER_DEFINED_GOAL}. "
    "Pay careful attention to the goal of the task, and the previous action history. "
    "Only return a single action. Try to use a more complex action if possible. "
    "You do not need to go home between tasks. "
    "If there are multiple possible actions, select the most useful one, and return the appropriate API call in JSON format."
)
```

```python
# Auto-Prompt to Generate a Proactive Plan
llm_proactive_task_plan = openai_client.chat.completions.create(
    model="gpt-4-turbo-2024-04-09",
    messages=[*ICL_EXAMPLES, {"role": "user", "content": ACTIVE_PROMPT}],
    temperature=0.2,
    tools=FUNCTIONS,
    tool_choice="auto",
)
```
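How the trigger prompt fits into a turn-based interaction is sketched below under stated assumptions: the planner is queried with the trigger prompt appended to the history (but not stored in it), the user confirms or rejects the proposal, and only confirmed actions enter the running memory. `proactive_turn`, the `propose`/`confirm` hooks, and the mock planner are hypothetical, and proposals are stored as plain assistant text for brevity rather than as tool-call messages.

```python
from typing import Callable, Dict, List, Optional

Message = Dict[str, str]


def proactive_turn(
    history: List[Message],
    active_prompt: str,
    propose: Callable[[List[Message]], Optional[str]],
    confirm: Callable[[str], bool],
) -> Optional[str]:
    """One proactive step: query the planner with the trigger prompt, then ask the user.

    `propose` stands in for the chat-completion call; `confirm` stands in for the user's yes/no.
    """
    proposal = propose([*history, {"role": "user", "content": active_prompt}])
    if proposal is None or not confirm(proposal):
        return None
    # Only confirmed actions enter the running memory; the trigger prompt itself is not stored.
    history.append({"role": "assistant", "content": proposal})
    return proposal


history: List[Message] = [{"role": "system", "content": "<system prompt>"}]
remaining = ["pickup(object=SKITTLES)", "pickup(object=RICE_KRISPIE)"]


def fake_planner(messages: List[Message]) -> Optional[str]:
    """Mock planner that returns canned proposals in order."""
    return remaining.pop(0) if remaining else None


accepted = proactive_turn(history, "Propose an action ...", fake_planner, confirm=lambda a: True)
print(accepted)  # pickup(object=SKITTLES)
```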